Inconsistent JSON Data Type Handling in Warewolf Workflow Output, Breaking PowerApps

When executing a workflow in Warewolf that retrieves data from a SQL Server database and generates a JSON output, the application modifies the data types in the JSON payload. This behavior leads to inconsistencies that break PowerApps integration, as PowerApps requires consistent data types for proper functioning.

The key issues observed are:

- String Fields:

  - String fields (`FldVarchar`) are sometimes output as numbers when the data from SQL is numeric (e.g., `1` instead of `"1"`). This results in inconsistent data types, causing errors when PowerApps processes the JSON.

- Float Fields:

  - Float values (`FldFloat`) are being formatted with a locale-specific decimal separator (",") instead of the JSON standard dot ("."). For example, `1.123` in SQL is output as `"1,123"`, which PowerApps cannot interpret as a valid numeric value.

- Null Values:

  - Null values in the database are being output as empty strings (`""`) in the JSON payload for both string and numeric fields. PowerApps expects numeric fields to use `null` to signify missing data, and empty strings cause parsing errors or unexpected behavior.

Steps to Reproduce:

- Create a stored procedure in SQL Server that returns a dataset containing strings, numbers, floats, and nulls:

      Create Procedure usp_GetValues AS
      Declare @TempTable TABLE (
          FldVarchar varchar(10),
          FldNumber int,
          FldFloat float
      )
      Insert into @TempTable Values
          ('String', 1, 1.123),
          ('1', 1, 1.123),
          (null, null, null)
      Select * from @TempTable

- Use the Warewolf Low Code tool to create a workflow that calls the stored procedure using the SQL Server Database connector.

- Map the output fields (`FldVarchar`, `FldNumber`, `FldFloat`) to a recordset in the workflow.

- Run the workflow and observe the JSON output.

- Attempt to integrate the JSON output with PowerApps.

Expected Behavior:

- `FldVarchar` should consistently output string values (e.g., `"String"` or `"1"`).

- `FldFloat` should use a dot (".") as the decimal separator (e.g., `1.123` instead of `"1,123"`).

- Null values should be represented as `null` in the JSON payload, not as empty strings (`""`).

Actual Behavior:

- `FldVarchar` alternates between string and numeric data types depending on the SQL value, breaking PowerApps' ability to process the field.

- `FldFloat` uses a comma as the decimal separator, which PowerApps cannot parse as a number.

- Null values are converted to empty strings, causing errors or unexpected behavior in PowerApps workflows.

Impact on PowerApps:

PowerApps relies on consistent and standard JSON data types for proper functionality. The inconsistent handling of data types in Warewolf is breaking workflows and causing significant delays in application development and deployment.

Proposed Solution:

- Ensure that Warewolf outputs consistent data types in JSON:

  - Always serialize strings as strings.

  - Maintain numeric values in their standard JSON representation (e.g., dot as the decimal separator).

  - Represent null values correctly as `null`.

- Provide an option in Warewolf to enforce strict type adherence when generating JSON outputs.
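The type contract proposed above can be sketched in a few lines of Python. This is only an illustration of the desired output, not Warewolf's implementation; the `COLUMNS`, `ROWS`, and `to_json` names are hypothetical and simply mirror the stored procedure from the reproduction steps.

```python
import json

# Hypothetical stand-in for the rows returned by usp_GetValues,
# with the types the database driver would hand back.
COLUMNS = ("FldVarchar", "FldNumber", "FldFloat")
ROWS = [
    ("String", 1, 1.123),
    ("1", 1, 1.123),
    (None, None, None),
]


def to_json(rows):
    """Serialize rows without re-guessing types from the values.

    A str stays a JSON string (even when it looks numeric), a float is
    written with a dot as the decimal separator regardless of locale,
    and None becomes JSON null.
    """
    records = [dict(zip(COLUMNS, row)) for row in rows]
    return json.dumps({"Values": records})


payload = to_json(ROWS)
print(payload)
```

The key design point is that the type comes from the column, not from inspecting whether a value happens to look like a number.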

Attachments:

- Screenshot of the Warewolf workflow.

- Example of the problematic JSON output:

      {
        "Values": [
          { "FldFloat": "1,123", "FldNumber": 1, "FldVarchar": "String" },
          { "FldFloat": "1,123", "FldNumber": 1, "FldVarchar": 1 },
          { "FldFloat": "", "FldNumber": "", "FldVarchar": "" }
        ]
      }

- Expected JSON output:

      {
        "Values": [
          { "FldFloat": 1.123, "FldNumber": 1, "FldVarchar": "String" },
          { "FldFloat": 1.123, "FldNumber": 1, "FldVarchar": "1" },
          { "FldFloat": null, "FldNumber": null, "FldVarchar": null }
        ]
      }
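Until the output is fixed, a consumer could repair the payload if it knows the declared column types. The sketch below is a hypothetical workaround, not part of Warewolf or PowerApps. It assumes that an empty string always stands for a SQL NULL (which would lose genuine empty strings) and that a comma in a float field is always the decimal separator. The `SCHEMA`, `normalize_value`, and `normalize` names are made up for illustration.

```python
import json

# Hypothetical schema: the declared type of each column in usp_GetValues.
SCHEMA = {"FldVarchar": str, "FldNumber": int, "FldFloat": float}


def normalize_value(value, declared):
    """Coerce one value to its declared column type."""
    if value is None or value == "":
        return None  # assumption: "" was produced from a SQL NULL
    if declared is str:
        return str(value)  # 1 -> "1"
    if declared is float:
        # assumption: the comma is a decimal separator, never a thousands separator
        return float(str(value).replace(",", "."))
    return int(value)


def normalize(payload):
    """Return the payload with every field coerced according to SCHEMA."""
    data = json.loads(payload)
    data["Values"] = [
        {name: normalize_value(value, SCHEMA[name]) for name, value in row.items()}
        for row in data["Values"]
    ]
    return json.dumps(data)


broken = (
    '{ "Values": [ { "FldFloat": "1,123", "FldNumber": 1, "FldVarchar": "String" }, '
    '{ "FldFloat": "1,123", "FldNumber": 1, "FldVarchar": 1 }, '
    '{ "FldFloat": "", "FldNumber": "", "FldVarchar": "" } ] }'
)
fixed = normalize(broken)
```

Applied to the problematic output above, this yields a payload equivalent to the expected one, but the two assumptions show why the fix belongs on the producing side.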

POST tool returning error

I'm getting an error when using the POST tool against a server that requires the Accept header:

"The 'Accept' header must be modified using the appropriate property or method.

Parameter name: name"

The API setup was working properly before, and I think this error started when the API began using the header on their side. Either way, it's a coding issue on our end.

This is the one I'm calling: Investec Programmable Banking, SA PB Account Information: Transfer Multiple v2

It's probably too difficult to set up on your end, so I'm happy to help with this where needed.

WW 2.8.1.21 - Trigger using PreFetch function doesn't process all Rabbit Message

Good day,

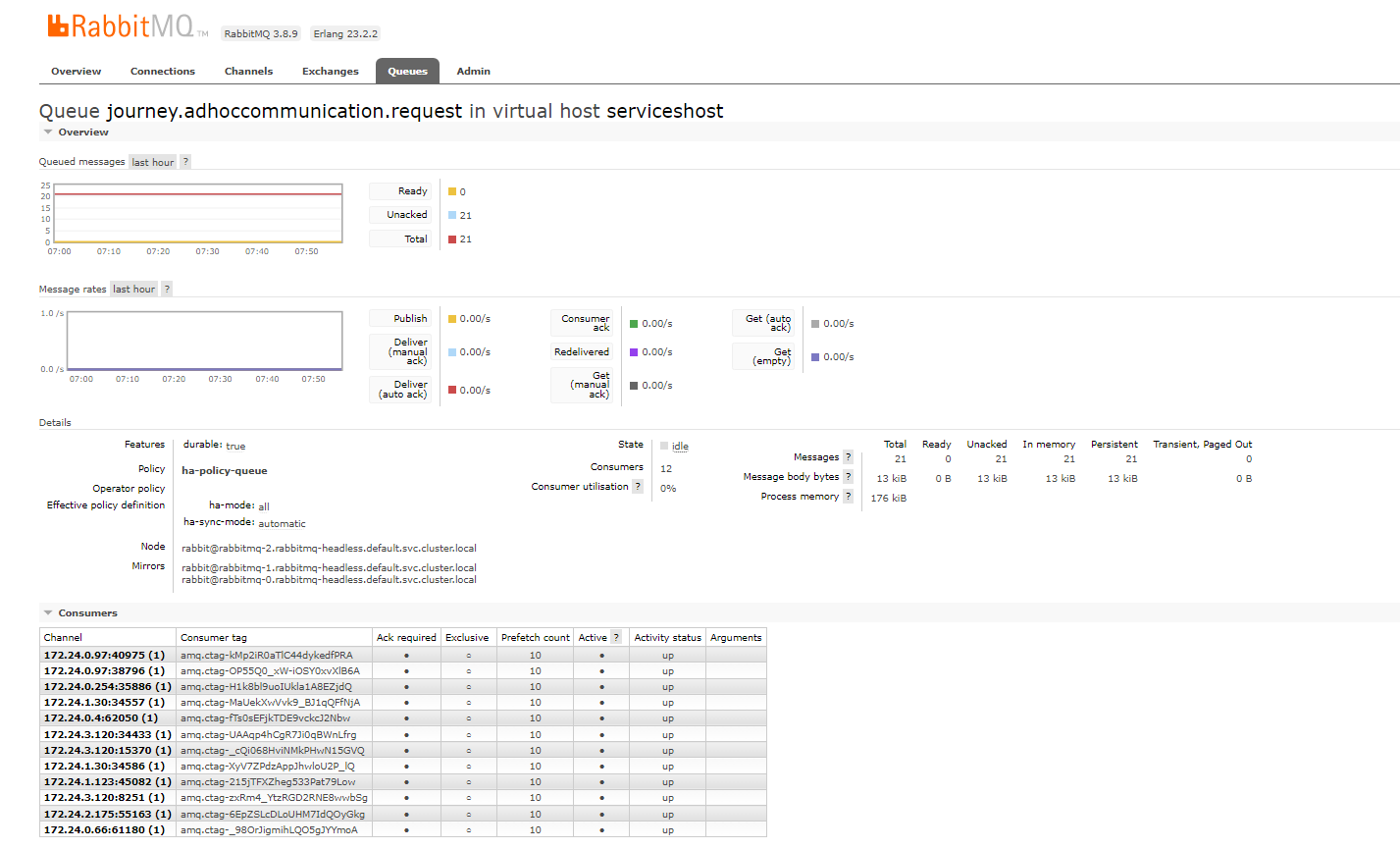

I recently monitored RabbitMQ while the Ad Hoc Journey was executing. After the Ad Hoc Journey finished executing (confirmed by looking at the ServerLog) I can still see unack'ed messages in the relevant Rabbit queue.

I've seen this happen on a number of occasions, and I'm wondering whether the PreFetch function, when running multiple containers (12 in this example), is locking some messages (21 in this example), as shown in the screenshot.

When turning off trigger exception is raised in Warewolf log

I am getting the following error when turning queues off. The queue's concurrency was set to 1; when I changed it to 0 and saved, the following errors appeared in the Warewolf log.

2022-11-06 17:28:10,817 INFO - [Warewolf Info] - Save Trigger Queue Service

2022-11-06 17:28:11,323 ERROR - [Warewolf Error] - TriggersCatalog - Load - C:\ProgramData\Warewolf\Triggers\Queue\52e4dba3-73fa-4267-877a-fa4f493a1511.bite

System.Security.Cryptography.CryptographicException: Key not valid for use in specified state.

at System.Security.Cryptography.ProtectedData.Unprotect(Byte[] encryptedData, Byte[] optionalEntropy, DataProtectionScope scope)

at Warewolf.Security.Encryption.DpapiWrapper.Decrypt(String cipher)

at Dev2.Runtime.Hosting.TriggersCatalog.LoadQueueTriggerFromFile(String filename)

at Dev2.Runtime.Hosting.TriggersCatalog.Load()

2022-11-06 17:28:11,332 ERROR - [Warewolf Error] - TriggersCatalog - Load - C:\ProgramData\Warewolf\Triggers\Queue\667e5af4-c5a8-461b-b03d-c5683fe1fca0.bite

System.Security.Cryptography.CryptographicException: Key not valid for use in specified state.

at System.Security.Cryptography.ProtectedData.Unprotect(Byte[] encryptedData, Byte[] optionalEntropy, DataProtectionScope scope)

at Warewolf.Security.Encryption.DpapiWrapper.Decrypt(String cipher)

at Dev2.Runtime.Hosting.TriggersCatalog.LoadQueueTriggerFromFile(String filename)

at Dev2.Runtime.Hosting.TriggersCatalog.Load()

2022-11-06 17:28:11,340 ERROR - [Warewolf Error] - TriggersCatalog - Load - C:\ProgramData\Warewolf\Triggers\Queue\9923dc51-c138-4944-a7a4-06cb468f2e86.bite

System.Security.Cryptography.CryptographicException: Key not valid for use in specified state.

at System.Security.Cryptography.ProtectedData.Unprotect(Byte[] encryptedData, Byte[] optionalEntropy, DataProtectionScope scope)

at Warewolf.Security.Encryption.DpapiWrapper.Decrypt(String cipher)

at Dev2.Runtime.Hosting.TriggersCatalog.LoadQueueTriggerFromFile(String filename)

at Dev2.Runtime.Hosting.TriggersCatalog.Load()

2022-11-06 17:28:11,343 INFO - [00000000-0000-0000-0000-000000000000] - Trigger restarting 'f00a48f4-5348-490b-add4-3991c578c717'

2022-11-06 17:28:11,359 ERROR - [ at System.Diagnostics.Process.Kill()

at Warewolf.OS.ProcessMonitor.Kill()] - Access is denied

2022-11-06 17:28:11,371 INFO - [WarewolfLogger.exe] - Logging Server OnError, Error details:Unable to read data from the transport connection: An existing connection was forcibly closed by the remote host.

2022-11-06 17:28:11,372 INFO - [WarewolfLogger.exe] - 11/6/2022 5:28:11 PM [Debug] Error while reading System.IO.IOException: Unable to read data from the transport connection: An existing connection was forcibly closed by the remote host. ---> System.Net.Sockets.SocketException: An existing connection was forcibly closed by the remote host

2022-11-06 17:28:11,378 INFO - [WarewolfLogger.exe] - at System.Net.Sockets.Socket.EndReceive(IAsyncResult asyncResult)

2022-11-06 17:28:11,378 INFO - [WarewolfLogger.exe] - at System.Net.Sockets.NetworkStream.EndRead(IAsyncResult asyncResult)

2022-11-06 17:28:11,378 INFO - [WarewolfLogger.exe] - --- End of inner exception stack trace ---

2022-11-06 17:28:11,379 INFO - [WarewolfLogger.exe] - at System.Net.Sockets.NetworkStream.EndRead(IAsyncResult asyncResult)

2022-11-06 17:28:11,379 INFO - [WarewolfLogger.exe] - at System.Threading.Tasks.TaskFactory`1.FromAsyncCoreLogic(IAsyncResult iar, Func`2 endFunction, Action`1 endAction, Task`1 promise, Boolean requiresSynchronization)

2022-11-06 17:28:11,390 INFO - [Warewolf Info] - queue process died: ProfileCustomerBackBook(f00a48f4-5348-490b-add4-3991c578c717)

Assigning recordsets within ForEach not working correctly

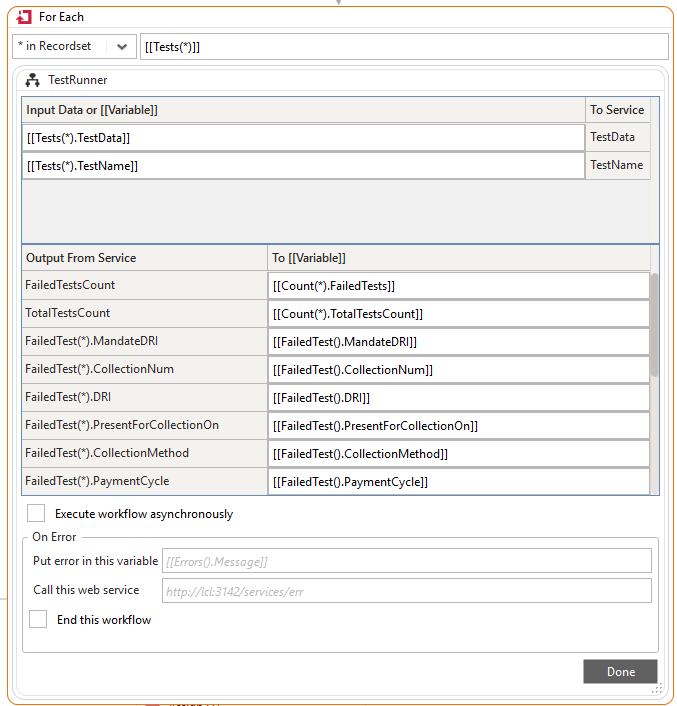

In the For Each, a new recordset is appended from a scalar variable output.

I then attempt to sum the values in the recordset, and it fails with an invalid calculate field error. Please see the debug output: there should be only one record in the recordset.

RabbitMQ Consumer Prefetch value

Hi Team, here is a suggestion to improve RabbitMQ consumer performance. We can divide the load across multiple consumers by specifying a prefetch count. If no prefetch value is specified, a single consumer has an unlimited buffer, so that one consumer will pick up all the messages from the queue.

Prefetch meaning "prefetch simply controls how many messages the broker allows to be outstanding at the consumer at a time. When set to 10, this means the broker will send 10 message, wait for the ack, then send the next."
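The effect described above can be shown with a toy model in plain Python. This is a simplified simulation, not RabbitMQ client code and not how Warewolf triggers work; the `Consumer`, `push`, and `simulate` names are hypothetical. It assumes the broker hands ready messages round-robin to any consumer whose unacknowledged count is below its prefetch limit, and that no message is acknowledged during the simulation.

```python
from typing import List, Optional


class Consumer:
    def __init__(self, name: str, prefetch: Optional[int]):
        self.name = name
        self.prefetch = prefetch  # None models "no prefetch limit"
        self.unacked = 0          # messages delivered but not yet acked

    def has_capacity(self) -> bool:
        return self.prefetch is None or self.unacked < self.prefetch


def push(ready: int, consumers: List[Consumer]) -> int:
    """Deliver ready messages round-robin; return how many stay in the queue."""
    while ready > 0:
        delivered = False
        for consumer in consumers:
            if ready > 0 and consumer.has_capacity():
                consumer.unacked += 1
                ready -= 1
                delivered = True
        if not delivered:  # every consumer is at its prefetch limit
            break
    return ready


def simulate(total: int, prefetch: Optional[int]) -> dict:
    """One consumer connects first; a second consumer joins afterwards."""
    first = Consumer("first", prefetch)
    second = Consumer("second", prefetch)
    ready = push(total, [first])          # only the first consumer is connected
    ready = push(ready, [first, second])  # the second consumer joins
    return {"first": first.unacked, "second": second.unacked, "ready": ready}


print(simulate(100, None))  # {'first': 100, 'second': 0, 'ready': 0}
print(simulate(100, 10))    # {'first': 10, 'second': 10, 'ready': 80}
```

Without a limit the first consumer buffers all 100 messages and the late joiner gets nothing; with a prefetch of 10, 80 messages stay in the queue and remain available to whichever consumer acknowledges first.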

References :

https://www.rabbitmq.com/consumer-prefetch.html

https://www.cloudamqp.com/blog/how-to-optimize-the-rabbitmq-prefetch-count.html

https://stackoverflow.com/questions/65201334/rabbit-mq-prefetch-undestanding

Suggestion: Add a prefetch configuration option to the trigger section of Warewolf Studio (i.e., similar to trigger concurrency).

Please let me know if you need more detail.

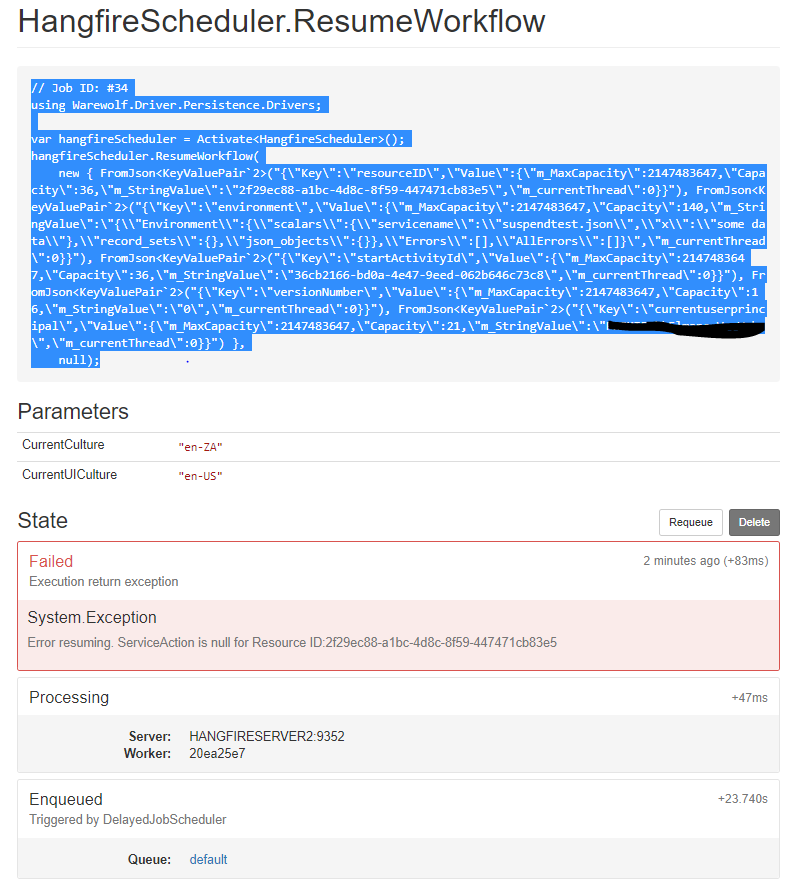

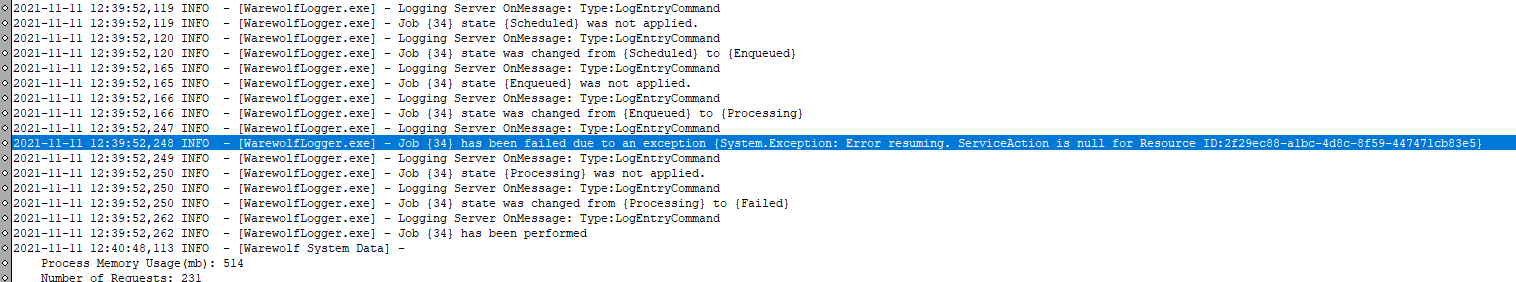

UAT-2.7.5.0 - Getting hangfire error at the time of resume suspend tool

PriceIncreaseByMandate.bite

newjourney (2).log

In the local environment, it sometimes gives a "Resume node not found" error, but after restarting the Warewolf service it works fine. The Price Increase journey has 2 Suspend tools in the workflow, and both work fine in the local environment. On the Dev environment, however, it fails randomly: out of 10 runs it fails almost 7 times. I am providing the workflow and server log here.

Yes, it is a new workflow.

Hangfire gives the error below:

{"FailedAt":"2022-04-18T05:28:31.2801372Z","ExceptionType":"System.InvalidOperationException","ExceptionMessage":"Error resuming. ServiceAction is null for Resource ID:ae105df3-9dc5-437e-ba21-e93dd6a0f356","ExceptionDetails":"System.InvalidOperationException: Error resuming. ServiceAction is null for Resource ID:ae105df3-9dc5-437e-ba21-e93dd6a0f356 ---> System.Exception: Error resuming. ServiceAction is null for Resource ID:ae105df3-9dc5-437e-ba21-e93dd6a0f356\r\n --- End of inner exception stack trace ---\r\n at HangfireServer.ResumptionAttribute.OnPerformResume(PerformingContext context) in Y:\\WOLF-INST-COMREL\\Dev\\Warewolf.HangfireServer\\ResumptionAttribute.cs:line 94\r\n at Hangfire.Profiling.ProfilerExtensions.InvokeAction[TInstance](InstanceAction`1 tuple)\r\n at Hangfire.Profiling.SlowLogProfiler.InvokeMeasured[TInstance,TResult](TInstance instance, Func`2 action, String message)\r\n at Hangfire.Profiling.ProfilerExtensions.InvokeMeasured[TInstance](IProfiler profiler, TInstance instance, Action`1 action, String message)\r\n at Hangfire.Server.BackgroundJobPerformer.InvokePerformFilter(IServerFilter filter, PerformingContext preContext, Func`1 continuation)"}

For each loop issue: All Recordset index values not passing into child workflow

Hi,

I have noticed that not all values of a recordset are passed into the child workflow by the For Each loop. I checked the same behavior with the Select & Apply tool, and it works fine there.

Please check the attached sample workflows and the issue shown in the screenshot.

forloop_recordset_all_values_not_pass_issue....png

ForLoopIssue-MainWorkflow (1).bite

ForLoopIssue-ChildWorkflow (1).bite

It looks like the For Each is getting confused with 2 different recordset inputs: User(*) and Benefit(*)

Gandalf 5 months ago

Change the For Each to use No. of Executes = 3 and see a different result. This comes from the tool handling the replacement of * differently and incorrectly.

Yogesh Rajpurohit yesterday at 9:13 a.m.

We have checked and found that the sequence of BenifitID in the User payload was incorrect, so we changed the sequence and checked the result. The output now comes through properly.

Attached are the Edited Workflows.

ForLoopIssue-ChildWorkflow (2).bite

ForLoopIssue-MainWorkflow (2).bite

Suspend Tool Failing

There is a problem with the suspend tool. I have two workflows created in 2.6.6.0, one works fine and the other does not.

Works fine: SuspendToolTest

Does not work: suspendtest

suspendtest.bite

SuspendToolTest.bite

Date Time and Debug not correct

Please see attached workflow. When passing the following JSON as input:

{

"dateTime": "2020-04-14T09:20:18.982Z"

}

Even though the JSON is valid and I have specified the DateTime Input Format correctly, when I debug I get an error that the Input String is not in the correct format. However, when I 'View in Browser' it does not show an error. Furthermore, the debug input of the tool does not show the input because of the error.

PS. I got this to work by setting the input format to my current system format. As it turned out, the Newtonsoft deserializer internally converts the DateTime into the system format. This is, however, hidden from the user and would be difficult to discover.
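The mismatch described above can be reproduced in miniature with Python's `datetime`. This is only an analogy for the reported behavior, not Warewolf or Newtonsoft code. `SYSTEM_FORMAT` is a hypothetical machine format chosen for illustration, and `parses_with` is a made-up helper.

```python
from datetime import datetime

ISO_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"   # the input format the workflow author declared
SYSTEM_FORMAT = "%m/%d/%Y %H:%M:%S"    # hypothetical system format of the server

raw = "2020-04-14T09:20:18.982Z"

# Step 1: a deserializer recognizes the ISO string and turns it into a date value.
parsed = datetime.strptime(raw, ISO_FORMAT)

# Step 2: the date value is written back out as text in the system format.
restringified = parsed.strftime(SYSTEM_FORMAT)


def parses_with(text, fmt):
    """Return True when text matches fmt, False when parsing raises ValueError."""
    try:
        datetime.strptime(text, fmt)
        return True
    except ValueError:
        return False


print(restringified)                             # 04/14/2020 09:20:18
print(parses_with(raw, ISO_FORMAT))              # True
print(parses_with(restringified, ISO_FORMAT))    # False: the declared format no longer matches
print(parses_with(restringified, SYSTEM_FORMAT)) # True: only the system format works
```

The tool never sees the original string, only the re-stringified one, which is why the declared format fails while the system format works.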

I think showing the Debug Input values would help the user see what value was actually being used and then they could adjust the format based on that. There is a case for saying that the format should not have changed without me explicitly doing so.
